and capture the full potential of your cloud business

In today’s IT ecosystem, the cloud has become synonymous with flexibility and efficiency. Still, all that glitters is not gold: applications with fixed usage patterns often continue to be deployed on-premises. The result is a hybrid cloud environment that creates various data management challenges. This article describes available solutions for tackling risks such as scattered data silos, vendor lock-in and lack of control.

Henry Ford’s famous quote, “If you always do what you’ve always done, you’ll always get what you’ve always got”, describes quite accurately what happens once you stop challenging the status quo in order to improve it: you become rigid and restricted in your thinking and, as a result, unable to adapt to new situations. In today’s business environment, data is considered the foundation that helps organizations succeed in their digital transformation, because the information derived from it can eventually lead to a competitive advantage. The value of data was also recognised by The Economist in May 2017, which stated that data has replaced oil as the world’s most valuable resource.

Why is that? Smartphones and the internet have made data abundant, ubiquitous and far more valuable, since nowadays almost any activity leaves a digital trace, whether you are taking a picture, making a phone call or browsing the internet. With the development of new devices, sensors and emerging technologies, there is no doubt that the amount of data will keep growing. According to the IBM Marketing Cloud report “10 Key Marketing Trends For 2017”, 90 % of the data in the world today has been created in the last two years, and the majority of it is unstructured.

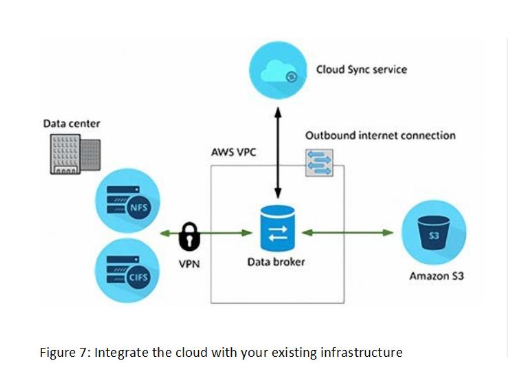

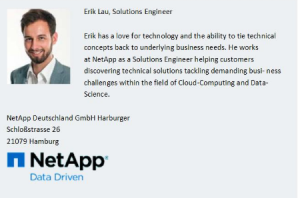

To get a sense of this scale, figure 1 illustrates the number of transactions executed every 60 seconds for a variety of data-related products on the internet. Estimates suggest that by 2020 about 1.7 MB of new data will be created every second for every human on the planet, leading to 44 zettabytes of data (or 44 trillion gigabytes). These exploding volumes of data change the nature of competition in the corporate world. If an organization is able to collect and process data properly, it can improve its product scope based on specific customer needs, which attracts more customers, which in turn generates even more data, and so on. The value of data can also be illustrated with the Data-Information-Knowledge-Wisdom (DIKW) pyramid (figure 2), which ties back to the initial quote. Typically, information is defined in terms of data, knowledge in terms of information, and wisdom in terms of knowledge; hence, data is considered the foundation for gaining wisdom. As a result, the key to success in the digital era is to maximize the value of data. That might mean improving the customer experience, making information more accessible to stakeholders, or identifying opportunities that lead to new markets and new customers.

Figure 1: 60 seconds on the internet

All that glitters is not gold

In addition to the exponential growth in the quantity of data described above, further challenges arise in the following three categories:

- Distributed: Data is no longer located at one location such as your local data centre. Data relevant for enterprises is distributed across multiple locations.

- Diverse: Data is no longer available only in a structured format. As mentioned above, most of the data being created is unstructured, such as images, audio and video files, emails, web pages and social media messages.

- Dynamic: Given the described increase in quantity, data sets grow quickly and can change over time. Hence, it is difficult to keep track of where the data is located and where it came from.

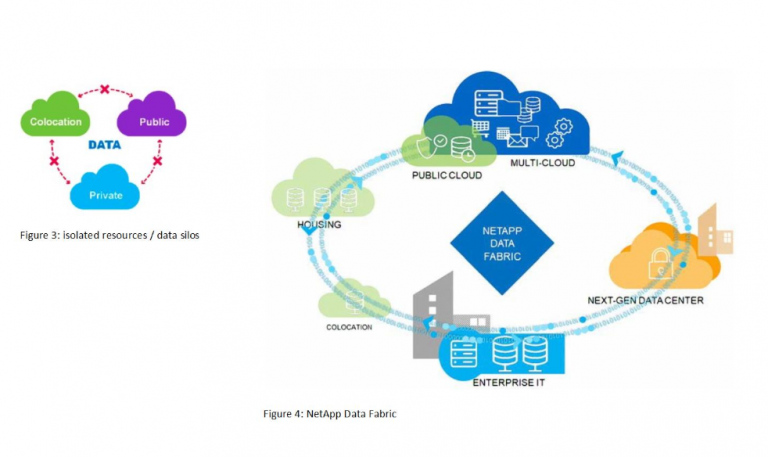

According to the IDC study “Become a Data Thriver: Realize Data-Driven Digital Transformation (2007)”, leading digital organizations have discovered that the cloud, with its power to deliver agility and flexibility, can tackle the described challenges and is indispensable for achieving their digital transformation. Cloud computing therefore helps the business stay flexible and efficient in an ever-changing environment. It enables customers to deploy services or run applications with varying usage needs and to pay only for what they need, when they need it. This realization leads most organizations to hybrid IT environments, in which data is generated and stored across a combination of on-premises, private cloud and public cloud resources.

The existence of a hybrid IT environment is probably the result of organic growth and might be more tactical than strategic. Different lines of business in the organization are likely using whatever tools they need to get their jobs done without involving the IT department. This approach creates numerous challenges for IT teams, such as knowing what data is where, protecting and integrating data, securing data and ensuring compliance, figuring out how to optimize data placement, and seamlessly moving data into and out of the cloud as needed. The question of data movement becomes even more crucial with regard to potential vendor lock-in.

To address those challenges, organizations must invest in cloud services while developing new data services that are tailored to a hybrid cloud environment. Deploying data services across a hybrid cloud can help organizations respond faster and stay ahead of the competition. However, all the data in the world won’t do your organization any good if the people who need it can’t access it. Employees at every level, not just executive teams, must be able to make data-driven decisions.

Yet on their way to new and innovative business opportunities fuelled by distributed, diverse and dynamic data sets, organizations often find their most valuable data trapped in silos, hampered by complexity and too costly to harness (figure 3). To underline this point, industry research from RightScale found that organizations worldwide are wasting, on average, a staggering 35 % of their cloud investment. Or, to put it in monetary terms, over $10 billion is being misspent globally on the provisioning of cloud resources each year.

Every cloud has a silver lining

The ultimate goal should be that business data can be shared, protected and integrated at corporate level, regardless of where the data is located. Although organizations can outsource infrastructure and applications to the cloud, they can never outsource the responsibility they have for their business data. Organizations have spent years controlling and aligning the appropriate levels of data performance, protection and security in their data centres to support applications. Now, as they seek to pull in a mix of public cloud resources for infrastructure and apps, they need to maintain control of their data in this new hybrid cloud. They need a single, cohesive data environment: a vendor-agnostic platform for on-premises and hybrid clouds that gives them control over their data. A cloud strategy is only as good as the data management strategy that underpins it, and if you can’t measure it, you can’t manage it. The starting point for establishing an appropriate cloud strategy is to gain insight into the available data in order to control it. This means identifying where the data is located, what performance, capacity and availability it requires, and what the storage costs are. Afterwards, the data can be integrated with cloud data services that extend capabilities in areas such as backup and disaster recovery management, DevOps, production workloads and cloud-based analytics.
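To make the idea of a data inventory more tangible, the following minimal Python sketch records location, capacity, availability requirement and storage cost for each data set and totals them per location. It is an illustration only: the `DataSet` class, the `summarize_by_location` helper, the location labels and all figures are hypothetical and are not taken from any product or study mentioned in this article.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DataSet:
    """One entry in a hypothetical data inventory."""
    name: str
    location: str               # e.g. "on-premises" or "public-cloud"
    capacity_gb: float          # capacity currently consumed
    cost_per_gb_month: float    # assumed storage price at this location
    availability_target: float  # required availability, e.g. 0.999

    @property
    def monthly_cost(self) -> float:
        return self.capacity_gb * self.cost_per_gb_month


def summarize_by_location(inventory):
    """Return {location: (total_capacity_gb, total_monthly_cost)}."""
    totals = defaultdict(lambda: [0.0, 0.0])
    for ds in inventory:
        totals[ds.location][0] += ds.capacity_gb
        totals[ds.location][1] += ds.monthly_cost
    return {loc: (cap, cost) for loc, (cap, cost) in totals.items()}


# Illustrative values only; none of these figures come from the article.
inventory = [
    DataSet("crm-db", "on-premises", 500.0, 0.10, 0.9999),
    DataSet("web-logs", "public-cloud", 2000.0, 0.02, 0.99),
    DataSet("backups", "public-cloud", 1000.0, 0.01, 0.999),
]

summary = summarize_by_location(inventory)
for location, (capacity, cost) in sorted(summary.items()):
    print(f"{location}: {capacity:.0f} GB, {cost:.2f} per month")
```

Even a simple overview like this shows where capacity and cost concentrate, which is the kind of measurement a data management strategy needs before data placement can be optimized.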

The following section describes a data management solution using NetApp’s Data Fabric as an example, though a variety of vendors offer similar solutions. (Editor’s note)

NetApp’s Data Fabric (figure 4) empowers organizations to use data to make intelligent decisions about how to optimize their business and get the most out of their IT infrastructure. It provides essential data visibility and insight, data access and control, and data protection and security. With it, you can simplify the deployment of data services across cloud and on-premises environments to accelerate digital transformation and gain the desired competitive advantage. Use cases in the areas of storage, analytics and data provisioning within a hybrid cloud environment are briefly described below, tackling the main issues outlined earlier.

Cloud Storage

NetApp offers several services and solutions to address data protection and security needs, including:

– Backup and restore services for SaaS services such as Office 365 and Salesforce

– Cloud-integrated backup for on-premises data

– End-to-end protection services for hybrid clouds

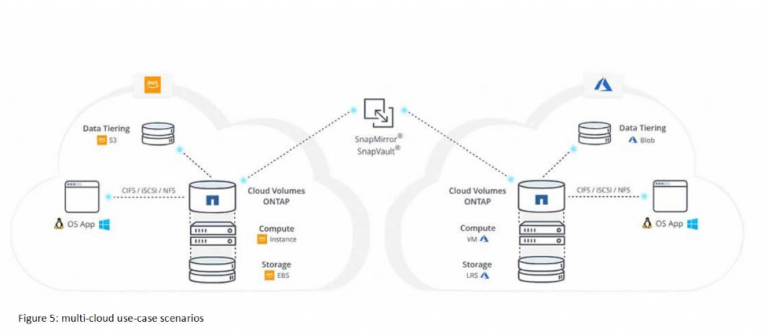

NetApp Cloud Volumes Service offers consistent, reliable storage and data management with multiprotocol support for MS Azure, AWS and Google Cloud Platform, enabling existing file-based applications to be migrated at scale and new applications to consume data and extract value quickly (figure 5).

Furthermore, it enables you to scale development activities in AWS and Google Cloud Platform, including building out developer workspaces in seconds rather than hours, and feeding pipelines to build jobs in a fraction of the time. Container-based workloads and microservices can also achieve better resiliency with persistent storage provided by Cloud Volumes Service.

Azure NetApp Files similarly enables you to scale development and DevOps activities in Microsoft Azure, all in a fully managed native Azure service. NetApp Cloud Volumes ONTAP® services enable developers and IT operators to use the same capabilities in the cloud as on-premises, allowing DevOps to easily span multiple environments.

Cloud Analytics

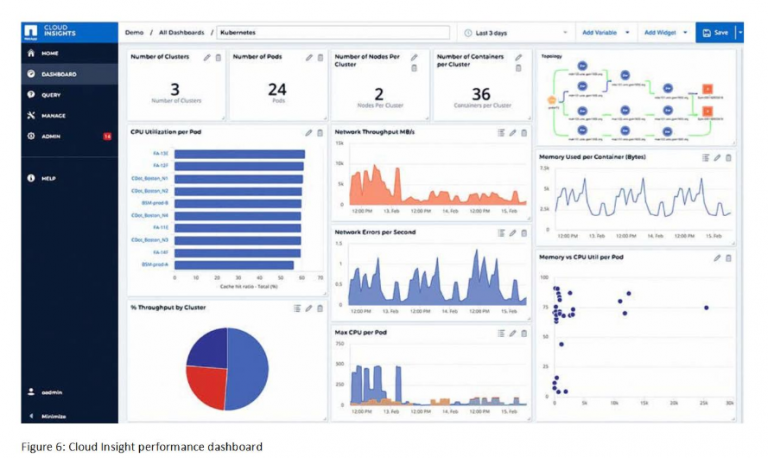

IT infrastructures are growing more complex, and administrators are asked to do more with fewer resources. While businesses depend on infrastructures that span on-premises and cloud, the administrators responsible for these infrastructures are left with a growing number of inadequate tools, which leads to poor customer satisfaction, out-of-control costs and an inability to keep pace with innovation. NetApp Cloud Insights is a simple-to-use, SaaS-based monitoring and optimization tool designed specifically for cloud infrastructure and deployment technologies. It provides users with real-time data visualization of the topology, availability, performance and utilization of their cloud and on-premises resources (figure 6).

Cloud Data Services – Cloud Sync

Transferring data between disparate platforms and keeping it synchronized can be challenging for IT. Moving from legacy systems to new technology, server consolidation and cloud migration all require large amounts of data to be moved between different domains, technologies and data formats. Existing methods, such as relying on simplistic copy tools or homegrown scripts that must be created, managed and maintained, can be unreliable or insufficiently robust, and fail to address challenges such as:

– Effectively and securely getting a dataset to the new target

– Transforming data to the new format and structure

– Meeting the timeframe and keeping the data up to date

– Cost of the process

– Validating that migrated data is consistent and complete
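The last point in the list, validating that a migrated dataset is consistent and complete, can be illustrated with a generic checksum comparison. The sketch below is an assumption-laden example rather than the method of any particular tool: `build_manifest`, `compare_manifests` and `demo` are hypothetical helper names, and the files are small demo files created in a temporary directory.

```python
import hashlib
import tempfile
from pathlib import Path


def build_manifest(root: Path) -> dict:
    """Map each file's relative path to the SHA-256 digest of its content.

    Files are read fully into memory, which is fine for a sketch; large
    files would be hashed in chunks instead.
    """
    manifest = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            manifest[path.relative_to(root).as_posix()] = digest
    return manifest


def compare_manifests(source: dict, target: dict) -> dict:
    """Report files that are missing, unexpected or different on the target."""
    common = set(source) & set(target)
    return {
        "missing": sorted(set(source) - set(target)),
        "unexpected": sorted(set(target) - set(source)),
        "mismatched": sorted(n for n in common if source[n] != target[n]),
    }


def demo() -> dict:
    """Build a tiny source and target tree with deliberate differences."""
    with tempfile.TemporaryDirectory() as tmp:
        src, dst = Path(tmp, "src"), Path(tmp, "dst")
        src.mkdir()
        dst.mkdir()
        (src / "a.txt").write_text("alpha")
        (src / "b.txt").write_text("beta")
        (src / "d.txt").write_text("delta")   # never copied to the target
        (dst / "a.txt").write_text("alpha")
        (dst / "b.txt").write_text("BETA")    # content differs from source
        (dst / "c.txt").write_text("gamma")   # exists only on the target
        return compare_manifests(build_manifest(src), build_manifest(dst))


report = demo()
print(report)
```

A migration would be considered complete and consistent in this sketch only when all three lists in the report are empty.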

One of the biggest difficulties in moving data is the slow speed of data transfers. Data movers must move data between on-premises data centres, production cloud environments and cloud storage as efficiently as possible.

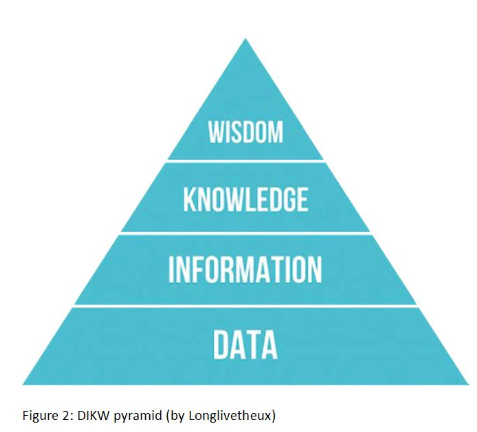

NetApp Cloud Sync is designed specifically to address those issues, making use of parallel algorithms to deliver speed, efficiency and data integrity. The objective is to provide an easy-to-use cloud replication and synchronization service for transferring files between on-premises NFS or CIFS file shares, Amazon S3 object format, Azure Blob, IBM Cloud Object Storage, or NetApp StorageGRID (figure 7).
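To illustrate in general terms why parallelism helps with data movement, the following Python sketch copies the files of a directory tree with a pool of worker threads. It is not Cloud Sync’s implementation, whose internals are not described here; `copy_one`, `parallel_copy`, `demo` and the default of four workers are hypothetical choices made for this example, which only works on local directories.

```python
import shutil
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def copy_one(src_root: Path, dst_root: Path, rel: Path) -> Path:
    """Copy a single file, creating its target directory if necessary."""
    target = dst_root / rel
    target.parent.mkdir(parents=True, exist_ok=True)
    shutil.copy2(src_root / rel, target)
    return rel


def parallel_copy(src_root: Path, dst_root: Path, workers: int = 4) -> list:
    """Copy every file under src_root to dst_root using a thread pool."""
    files = [p.relative_to(src_root) for p in src_root.rglob("*") if p.is_file()]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        copied = list(pool.map(lambda rel: copy_one(src_root, dst_root, rel), files))
    return sorted(copied)


def demo():
    """Copy five small demo files and check that the contents match."""
    with tempfile.TemporaryDirectory() as tmp:
        src, dst = Path(tmp, "src"), Path(tmp, "dst")
        (src / "nested").mkdir(parents=True)
        dst.mkdir()
        for i in range(5):
            (src / "nested" / f"file{i}.txt").write_text(f"payload {i}")
        copied = parallel_copy(src, dst)
        intact = all(
            (dst / rel).read_text() == (src / rel).read_text() for rel in copied
        )
        return [rel.as_posix() for rel in copied], intact


copied_names, intact = demo()
print(copied_names, intact)
```

Threads are used here because copying files is dominated by waiting on I/O rather than by computation; a real transfer service would additionally have to handle retries, incremental changes and remote endpoints.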