If you want to accelerate your cloud business, you are most likely looking at setting up automated pipelines and automating at least parts of your operations. What if I told you that this already exists, and that it frees you from handling it yourself?

Interested? Read on!

The IaaS Story

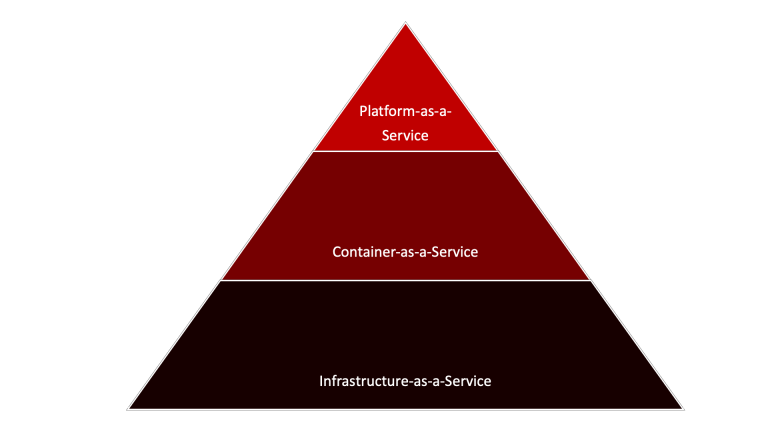

The first thing one works with when migrating to or operating in a cloud environment (and, quite frankly, for many organizations unfortunately the only thing) is the Infrastructure-as-a-Service (IaaS) layer. This is where one provisions virtual machines and infrastructure using web- and CLI-based controls.

IaaS has clear advantages. Because infrastructure is outsourced to an operated datacenter and drawn from a shared resource pool, flexibility grows. VMs can be deployed faster, and a certain level of security is ensured, since all reputable cloud providers operate dedicated security teams and infrastructure. It also saves costs.

Operating the provisioned infrastructure, however, usually remains in the hands of the customer; the cloud provider operates only the underlying foundations and services. This fact is often overlooked: operational expenses do not go away. They tend to grow, as do infrastructure expenses, at least as long as dedicated governance, operations and deployment processes are missing or not strictly enforced.

Infrastructure-as-a-Service can therefore only mark the beginning of a cloud journey: it addresses the technical layer, but not deployment, governance, mindset or overall processes. Many organizations understand this and step up their game by automating deployments, setting up and customizing CI/CD build and deployment pipelines. But there is a problem with this…

CI/CD: Manually automating deployments

Traditionally, Continuous Integration / Continuous Delivery (CI/CD) is seen as a major step towards a modern cloud environment. And the idea is absolutely right: you automate your build and deployment processes. Using the right tools, you can create a complete end-to-end solution that greatly improves the speed and reliability of your deployments.

Jenkins and Bamboo are typical CI/CD platforms

Tools typically used for CI/CD are Jenkins, Jenkins X and Bamboo, which describe themselves as automation servers. Their focus is to provide a platform for automation, not an automated platform. This small difference turns out to be fundamental: an automation server gives you the means to automate, but every pipeline still has to be written and maintained by hand. It does not provide automated automation.

The problem with the automation-platform approach: working with such a platform is complex, time-consuming and challenging. An engineer responsible for CI/CD pipelines can literally work around the clock automating and maintaining them. This is an enormous effort and not cost-effective, although it usually still saves money compared to manual processes, thanks to automated and reusable processes and approaches.
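To make "a platform for automation" concrete, here is a toy sketch in Python of what a pipeline on an automation server boils down to: an ordered list of stages that run until the first failure. It is not the API of Jenkins or Bamboo; the stage names and the `run_pipeline` helper are hypothetical. The point is that the platform only executes the stages, while someone has to write and maintain every one of them.

```python
from typing import Callable

# A stage is a name plus a callable that returns True on success.
# Stage names are hypothetical placeholders; on a real automation server
# each one would be a script that an engineer writes and maintains by hand.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order and stop at the first failure (fail-fast).

    Returns a log line per executed stage.
    """
    log: list[str] = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            break  # later stages (e.g. deploy) must not run after a failure
    return log


if __name__ == "__main__":
    pipeline: list[Stage] = [
        ("checkout", lambda: True),
        ("build", lambda: True),
        ("test", lambda: False),  # simulate a failing test suite
        ("deploy", lambda: True),
    ]
    for line in run_pipeline(pipeline):
        print(line)
```

The fail-fast loop is the easy part; the cost described above lies in the contents of each stage, which differ per project and change with every toolchain update.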

Manually automating operations

CI/CD is a way of automatically building your workloads and deploying them into production. But it says nothing about operating production-grade workloads and infrastructure. This needs to be handled by an ops team. More sophisticated teams usually try to automate operations as well, using central management tools, customizable scaling and health rules, and so on.

Ansible and Terraform, Grafana and Prometheus

These efforts can be supported by the right kind of middleware; Kubernetes, for example, provides many functions for automated operations and automated health checks. Unfortunately, this only applies to containerized workloads, and even with containers, automated operations and health checks must be set up and configured for each and every deployment. Kubernetes is a very sophisticated automation tool, but it does not guess and does not apply advanced rulesets on its own.
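As an illustration of that per-deployment configuration, the sketch below builds a minimal Kubernetes Deployment manifest in Python. The field names (`livenessProbe`, `httpGet`, `initialDelaySeconds`, `resources.requests`/`limits`) follow the `apps/v1` Deployment schema; the app name, image, health path, port, replica count and resource values are placeholder assumptions. Kubernetes only acts on health and resources because someone wrote these values down.

```python
import json


def deployment_manifest(name: str, image: str, health_path: str, port: int) -> dict:
    """Build a minimal apps/v1 Deployment with a liveness probe and resource limits.

    All concrete values are placeholders; each workload needs its own.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": 2,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                            # Kubernetes restarts the container if this probe keeps failing.
                            "livenessProbe": {
                                "httpGet": {"path": health_path, "port": port},
                                "initialDelaySeconds": 10,
                                "periodSeconds": 15,
                            },
                            # Requests guide scheduling; limits cap consumption.
                            "resources": {
                                "requests": {"cpu": "250m", "memory": "256Mi"},
                                "limits": {"cpu": "500m", "memory": "512Mi"},
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_manifest("orders", "example.org/orders:1.0", "/healthz", 8080)
    print(json.dumps(manifest, indent=2))
```

Since `kubectl apply -f -` accepts JSON on standard input, the printed output could be piped to it. Note that nothing here is inferred automatically: probe path, timings and limits all have to be chosen per workload.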

If we bring infrastructure such as databases or message queuing systems into the ecosystem, the situation gets even worse. These kinds of infrastructure are notorious for their inherent complexity in setup and operations. Operations teams responsible for them must be experts with the appropriate mindset and automation skills just to roll them out and integrate them with monitoring and operational toolchains, let alone to set up automated operations and failure management.

Setting up and automating operations based on traditional approaches and infrastructure is therefore very cost-intensive and requires a lot of effort. Still, it is cheaper to invest in such approaches and learn these technologies than to try to operate complex cloud-native and cloud-based infrastructure manually. Manually automating operations, by integrating infrastructure, writing rulesets and automating infrastructure provisioning, is therefore a valid, although expensive, path to automated operations.

Automated Platform-as-a-Service

Did you notice the pattern? Automation is expensive, be it on the CI/CD side of things or at the operational level. It requires a lot of knowledge, skills, mindset and experience, and it will still be a financial challenge.

What if it were not necessary to invest so much effort and money? What if parts of deployment and operations could be handled automatically, without additional manual intervention? What if it just worked?

If this sounds too good to be true, rest assured: it is not. The approach and solution discussed here cannot solve all issues and challenges, but they take away a lot of pain, particularly in the area of manual automation.

First, let us discuss the approach that eases development and operational pains: Platform-as-a-Service. This term describes an approach in which components and infrastructure are rolled out and operated on behalf of development and operations teams, automatically and deeply integrated into Infrastructure-as-a-Service and Container-as-a-Service environments. The "as-a-Service" part of the name makes it obvious that whatever is deployed and operated this way is made available as a service: the customer does not have to operate it, and it simply works, because someone (or something) takes care of all the technical details.

Platform-as-a-Service abstracts underlying complexity

One might expect this to come at a price: an environment fulfilling this promise would have to be opinionated, hard to maintain itself, and complex to integrate and roll out. Is that really the case?

Well, actually: No.

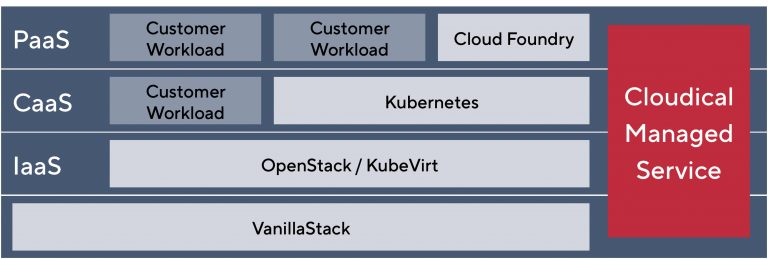

First, let’s clarify what we are talking about: a Cloud Foundry distribution that can build, deploy and run workloads, provide databases and additional infrastructure as a service, and support both web- and CLI-based operations.

SUSE Cloud Application Platform and Vanilla Cloud Foundry

Cloud Foundry exists in different flavors and distributions. SUSE Cloud Application Platform and Vanilla Cloud Foundry are of interest to us, since they are designed to run on Kubernetes. This solves many issues of traditional environments and traditional Cloud Foundry distributions, since ultimately all workloads are executed in containers by Kubernetes, orchestrated by Cloud Foundry and its rulesets.

A Cloud Foundry distribution can build software in a multitude of programming languages (C#, Java, Node, PHP, etc.), containerize it and bring it into production – without having to write build scripts or customizations. It detects the programming language, creates a build environment, executes the build and deploys the generated artifacts to the target environment – automatically, without manual intervention!
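The detection step can be pictured as trying a list of rules in priority order until one matches, which is roughly how buildpack detection works in Cloud Foundry. The Python sketch below is a simplified, hypothetical model: real buildpacks each ship their own detection logic, and the marker files in `DETECTION_RULES` are illustrative assumptions, not the exact rules of any buildpack.

```python
import tempfile
from pathlib import Path
from typing import Optional

# Hypothetical, simplified detection rules in priority order. Real Cloud
# Foundry buildpacks each implement their own detect logic; these marker
# files are illustrative assumptions only.
DETECTION_RULES: list[tuple[str, tuple[str, ...]]] = [
    ("nodejs", ("package.json",)),
    ("php", ("composer.json", "index.php")),
    ("java", ("pom.xml", "build.gradle")),
    ("dotnet", ("*.csproj",)),
]


def detect_language(app_dir: Path) -> Optional[str]:
    """Return the first language whose marker file exists in app_dir, else None."""
    for language, patterns in DETECTION_RULES:
        for pattern in patterns:
            # glob handles both literal file names and wildcard patterns
            if any(app_dir.glob(pattern)):
                return language
    return None  # no rule matched: staging would fail


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        app = Path(tmp)
        (app / "package.json").write_text("{}")
        print(detect_language(app))  # nodejs
```

"First match wins" keeps detection predictable when an application contains marker files for several languages; the order of the rules then decides which build environment is used.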

Furthermore, a Cloud Foundry distribution can operate deployed workloads. In its dashboard, one can define memory allocation, the processor cores to be assigned, limits and boundaries. All of this is translated into logic for the underlying scheduler, which automatically operates the workloads within these constraints. It can even scale workloads up or down based on simple, easy-to-maintain rulesets.
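A typical scaling rule of this kind is a threshold band: add an instance when a metric is too high, remove one when it is too low, and stay within a minimum and maximum. The Python sketch below shows that logic under stated assumptions; the rule format, the metric (average CPU percentage) and the `ScalingRule` and `desired_instances` names are hypothetical and do not reflect the API of any Cloud Foundry component.

```python
from dataclasses import dataclass


@dataclass
class ScalingRule:
    """Hypothetical threshold rule: scale out above the band, scale in below it."""

    metric_upper: float  # e.g. average CPU % that triggers scale-out
    metric_lower: float  # average CPU % that triggers scale-in
    min_instances: int
    max_instances: int
    step: int = 1


def desired_instances(rule: ScalingRule, current: int, metric: float) -> int:
    """Return the new instance count, clamped to [min_instances, max_instances]."""
    if metric > rule.metric_upper:
        target = current + rule.step
    elif metric < rule.metric_lower:
        target = current - rule.step
    else:
        target = current  # inside the band: leave the workload alone
    return max(rule.min_instances, min(rule.max_instances, target))


if __name__ == "__main__":
    rule = ScalingRule(metric_upper=70, metric_lower=30, min_instances=2, max_instances=10)
    print(desired_instances(rule, current=3, metric=85))   # 4
    print(desired_instances(rule, current=10, metric=95))  # 10, capped at the maximum
    print(desired_instances(rule, current=2, metric=10))   # 2, floored at the minimum
```

The gap between the upper and lower threshold keeps the rule from flapping between scale-out and scale-in when the metric hovers around a single value.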

So, a Platform-as-a-Service solution based on Cloud Foundry and Kubernetes can solve many of the issues one might have with deployment and operations. It is not a silver bullet, but it makes life much easier, and it does so without manual intervention or customization. It therefore provides automated automation and saves a lot of money and resources, since dedicated engineers for defining and maintaining build pipelines are no longer required. The potential for savings is enormous!

Managing and automating the underlying infrastructure

But one operational challenge remains with Cloud Foundry as a Platform-as-a-Service solution on Kubernetes: it is complex to set up, integrate and maintain.

Operational dashboard in Grafana

A Cloud Foundry distribution allows for simpler and more convenient deployments, provides databases as a service and brings you a step closer to the ideal of automated workload operations, but it cannot take away all the complexity of infrastructure management.

This complexity still exists and needs to be handled. It takes an experienced and skilled team to set up, automate and operate a combination of Kubernetes, Cloud Foundry, storage and operational tools, not to mention integrating them and keeping the environment running and healthy at all times.

This can certainly be achieved with a dedicated team, but it means mastering a very steep learning curve, and it will be expensive. 24/7 operations mean that such a team needs around five experts in Kubernetes, Cloud Foundry and related technologies. They are hard to find, and they are expensive.
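The figure of five follows from simple arithmetic: 24/7 coverage means 168 hours per week, and one engineer covers roughly one working week. The Python sketch below computes that lower bound, assuming a 40-hour working week and exactly one person on duty at any time; vacation, sick leave, training and shift overlaps are ignored, so a real team would need more people, not fewer.

```python
import math


def min_headcount_for_coverage(hours_to_cover: float, hours_per_engineer: float) -> int:
    """Lower bound on engineers needed so that one person is always on duty."""
    return math.ceil(hours_to_cover / hours_per_engineer)


HOURS_PER_WEEK_24_7 = 24 * 7  # 168 hours of coverage per week
HOURS_PER_WEEK_8_5 = 8 * 5    # 40 hours for business-hours coverage
WORK_WEEK = 40                # assumed contractual hours per engineer

print(min_headcount_for_coverage(HOURS_PER_WEEK_24_7, WORK_WEEK))  # 5
print(min_headcount_for_coverage(HOURS_PER_WEEK_8_5, WORK_WEEK))   # 1
```

Since 168 / 40 = 4.2, rounding up gives five people for 24/7 coverage, compared with a single person for 8/5 coverage.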

Therefore, a managed service that is truly native to these technologies and approaches would make a lot of sense, be it as a temporary or a permanent solution.

Managed PaaS

Fortunately, such a service is available!

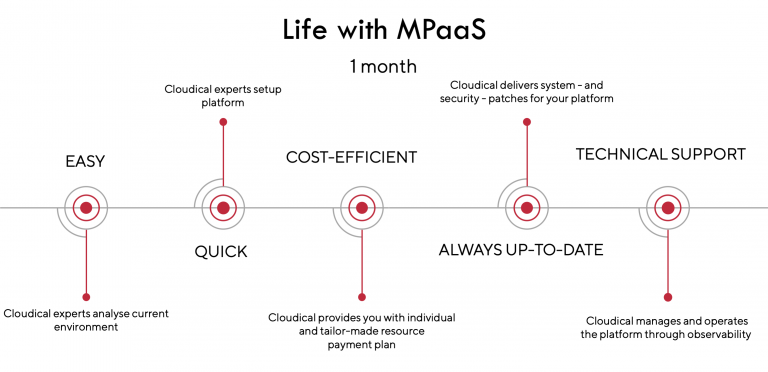

Cloudical Managed PaaS covers all aspects of sizing and setting up a working environment based on Kubernetes and SUSE’s Cloud Foundry platform. Our experts automate the platform, take responsibility for day-2 operations, provide support, apply patches and updates, and ensure security.

Cloudical Managed PaaS

Cloudical Managed PaaS is available on any platform: on-premises in your own datacenter, in your private cloud environment, on public clouds such as Noris Cloud, Oracle Cloud, IBM Cloud or OVHCloud, or on hyperscaler environments such as AWS, Microsoft Azure or Google Cloud Platform. Our experts are skilled with the underlying platforms, roll the solution out within hours and provide up to 24/7 support, on any platform and any cloud!

With a simple and transparent cost structure, Cloudical Managed PaaS allows your ops team to focus on maintaining your traditional environments or to grow into these sophisticated, cloud-native ecosystems, without exposing you to risks regarding operational excellence and problem solving. At the same time, it is surprisingly affordable and always includes all subscription and licensing fees for the software stack used.

Cloudical Managed PaaS is based on open-source software, is already trusted by multiple customers, and costs less than a dedicated operations team, whether you choose 8/5 or 24/7 support and operations levels.

Relaxed deployment, relaxed operations

With a modern Infrastructure-as-a-Service stack, Kubernetes as the container platform, Cloud Foundry as the Platform-as-a-Service layer and Cloudical Managed PaaS as the operations and managed-service model, customers can achieve automated deployment, automated workload operations and a completely worry-free platform experience.

Ease of operations with Cloudical Managed PaaS

Setting up such a complex operational ecosystem usually takes weeks to months, and mastering it takes even longer, whether you automate it manually using traditional platforms such as Jenkins or use the capabilities of Cloud Foundry.

A managed service that includes rollout and operation of such a stack makes life much easier and saves a lot of money and effort. Integration into a sophisticated operational ecosystem on the one hand, and the use of battle-proven open-source frameworks and tools on the other, add up to a complete end-to-end experience that does not lock the customer into one ecosystem. Instead, it is open and able to run on literally any platform that supports at least containers.

This proves to be a game-changer, allowing organizations to focus on what should be their core competency: developing and/or running awesome workloads on awesome and reliable infrastructure.

You can find out more about Cloudical Managed PaaS by visiting our website at https://cloudical.io/offerings/managed-paas or by contacting us via email at sales@cloudical.io.

Author:

Karsten Samaschke,

CEO Cloudical Deutschland GmbH

karsten.samaschke@cloudical.io

cloudical.io