In a world full of security threats, and in fully automated, fully scalable environments, security has to be one of the biggest concerns for developers. If it is not, they will scale not only their applications but their security issues as well…

In this article, we look at how to avoid insecure applications from a process and automation point of view.

Security within a software development process

Security can never be enforced by tools alone; it is a matter of mindset and approach. This holds even more true for cloud-native applications and cloud environments, since they are built to scale: any security issue could spread far more widely than in traditional, monolithic applications running in more separated environments.

The traditional approach

In traditional software development environments, security is typically understood as an additional layer, an additional step executed just before an application is mature enough to be released. This allows developers to focus on their code, which is reviewed later on; the review produces a list of security issues and findings, which then have to be eliminated.

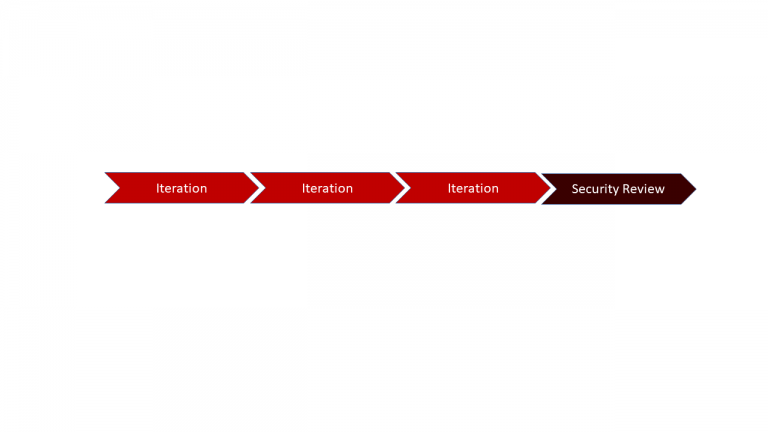

While this might work for smaller, monolithic applications, it does not scale to cloud-native approaches with their continuous stream of new versions and releases. It also downplays security by treating it as something additional, done by experts. Moreover, identifying and fixing issues at the end of a development process tends to be very expensive and error-prone in itself (fig. 1). It therefore makes far more sense to rethink security and its place within the software development process.

Fig. 1: Timeline for security involvement in a traditional environment

The agile approach

Within agile and cloud-native projects, security has to be understood as something ordinary, something that is part of every iteration. Part of an agile and cloud-native mindset is to involve every stakeholder on a regular basis. Security experts are therefore stakeholders to be involved in every iteration.

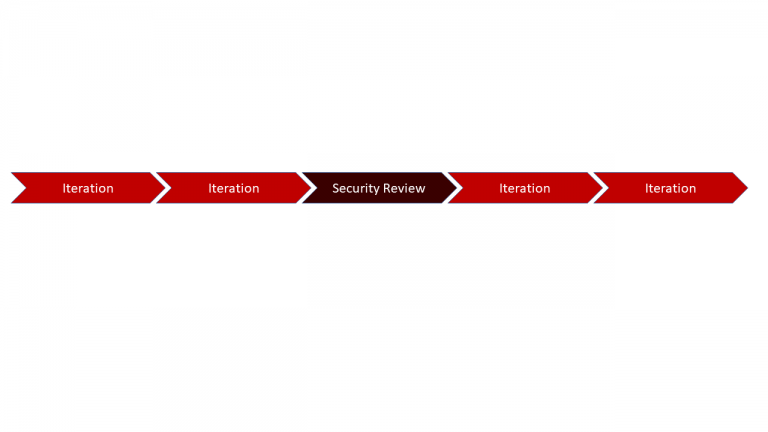

In an agile environment, this means treating security engineers and architects as stakeholders or experts rather than as an integral part of the team. They can then contribute either through regular, planned formal reviews every two or three iterations, or by being present during defined sprints and giving feedback there. They are thus part of the project without being involved all the time (fig. 2).

This approach solves a lot of issues, but is it applicable to cloud-native environments? The short answer is no: it does not address the complexity of cloud-native applications and infrastructures.

Fig. 2: Timeline for security involvement in an agile environment

DevSecOps

For cloud-native environments and applications (but not limited to them), this approach of working with specialists needs to be stepped up, since iterations are much shorter, new versions are deployed far more often and the whole process of handling software is more complex than ever before.

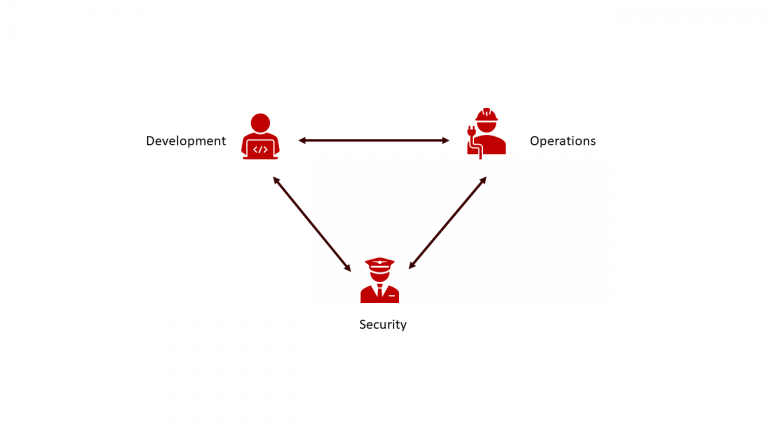

In such environments, DevOps means an improved kind of interaction between development and operations teams, allowing for far closer collaboration. Essentially, development and operations work with each other all the time, ensuring a fast and automated transition into operations. Both sides take responsibility, act jointly and implement a holistic view of software and infrastructure. This allows for faster time-to-market, better analysis of performance and functional issues, automated scalability and deeper integration of software and infrastructure. DevOps is essentially a mindset, to be lived and practiced continuously.

The same holds true for security: it needs to become a mindset and needs to be practiced continuously, jointly with DevOps. The term “DevSecOps” reflects this. DevSecOps strives to implement security as part of an ongoing software development and release cycle. It implies involving security experts in development and operations processes right from the start of a project. It is a mindset developed to prevent software development and operations processes from stalling due to security constraints. It enforces the automation of security measures as well as constant involvement in the product’s development and operations streams (fig. 3).

Fig. 3: DevSecOps Roles

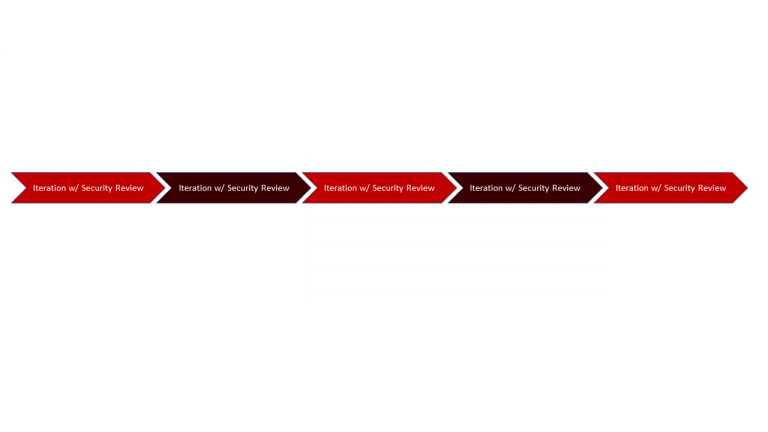

Ultimately, DevSecOps is a matter of culture. It means understanding security as an integral part of the product, not as an add-on or even as something unwanted. Security has to be part of the development and operations pipelines, security experts need to be an integral and continuous part of every DevOps team, and security measures need to be applied automatically (fig. 4).

Fig. 4: DevSecOps timeline

To start with DevSecOps, a team needs to ask and answer these questions:

– What level of security measures does a product require?

– Which intrusion vectors exist and need to be mitigated?

– Where and how is data stored and encrypted?

– How can automation be achieved?

– How important is time-to-market compared to enforcing security, and for which software?

The questions need to be re-evaluated and answered continuously. Environments and software need to be understood as standardized, automated entities, and trustworthy pipelines and processes need to be established.

Trustworthy pipelines and processes

Trustworthiness is often understood as security on a per-container basis, such as installing virus scanners or firewalls inside the containers. Frankly, this would be complete nonsense: it would affect the performance of every process of the application, and containers usually expose only HTTP or HTTPS ports anyway. Moreover, virus scanners and firewalls inside containers would not enforce security but actually hamper it, since these tools require extensive permissions and thus introduce even bigger security risks of their own.

No, there needs to be a different approach.

Security and trustworthiness at the container level within cloud-native environments need to be established through a secure and trustworthy path for every component to be deployed. This implies:

– No use of external, unverified container images (e.g. from Docker Hub)

– No use of external, unverified registries (e.g. Docker Hub)

– No use of unverified binary libraries for Java and other programming languages

– Each component to be used needs to be present as source code

Every built component can be stored in internal registries and repositories, but the only way into an environment is as source code. Referenced base container images and external components need to be managed and approved by a team responsible solely for this assessment, made up of security, development and infrastructure experts.

The rule of thumb is: if it is not approved by that team, it does not exist for the developer.
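To make this rule enforceable rather than a matter of discipline, a pipeline can refuse any build whose base images are not on the approved list. The following Python sketch is one possible gate, not a prescribed tool: the allowlist contents and the registry host `registry.internal.example` are hypothetical, and the parser deliberately ignores `ARG` substitution and line continuations.

```python
"""Sketch of a pipeline gate that only accepts approved base images.

The allowlist entries and the registry host are hypothetical examples.
"""
from typing import List

# Hypothetical allowlist, maintained by the assessment team.
APPROVED_BASE_IMAGES = {
    "registry.internal.example/base/eclipse-temurin:21-jre",
    "registry.internal.example/base/python:3.12-slim",
}


def base_images(dockerfile_text: str) -> List[str]:
    """Return the external images referenced by FROM instructions.

    Limitations of this sketch: ARG substitution and backslash line
    continuations are not handled.
    """
    images: List[str] = []
    stage_names = set()
    for raw_line in dockerfile_text.splitlines():
        parts = raw_line.strip().split()
        if not parts or parts[0].upper() != "FROM":
            continue
        # Skip options such as --platform=linux/amd64.
        args = [p for p in parts[1:] if not p.startswith("--")]
        if not args:
            continue
        image = args[0]
        # "FROM build" may refer to an earlier build stage instead of an image.
        if image not in stage_names:
            images.append(image)
        # Remember stage names declared with "AS <name>".
        if len(args) >= 3 and args[1].upper() == "AS":
            stage_names.add(args[2])
    return images


def unapproved_images(dockerfile_text: str) -> List[str]:
    """Return every referenced base image that is not on the allowlist."""
    return [img for img in base_images(dockerfile_text) if img not in APPROVED_BASE_IMAGES]


if __name__ == "__main__":
    example = (
        "FROM registry.internal.example/base/python:3.12-slim AS build\n"
        "COPY . /src\n"
        "FROM docker.io/library/nginx:latest\n"
    )
    print(unapproved_images(example))  # prints ['docker.io/library/nginx:latest']
```

In a pipeline, a non-empty result would fail the build before anything reaches a registry; the allowlist itself would be versioned and changed only by the assessment team.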

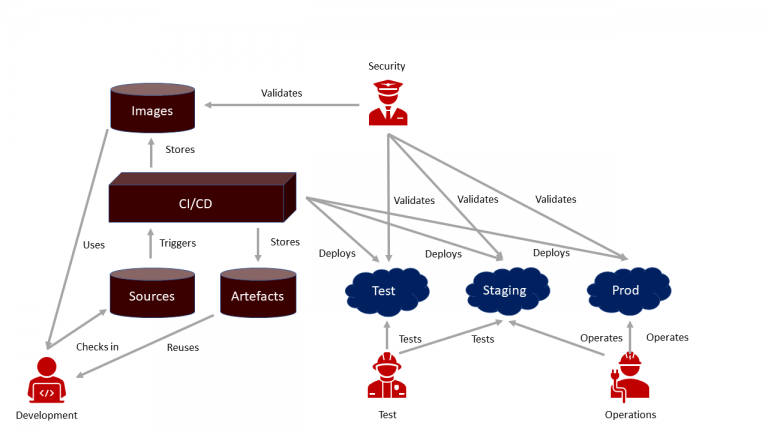

Within an environment, every build and deployment step needs to be automated; there is no such thing as doing it by hand. A CI/CD pipeline needs to exist, usually set up using tools such as Jenkins CI or CloudFoundry.

Code used within the environment is built, tested and deployed only by these tools, never by the developer. From the system’s perspective, compiled code never leaves the developer’s private environment: only source code is pushed into a repository, from where the CI/CD pipeline picks it up.
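One way to back up the source-only rule technically is a check, run for example in a Git pre-push hook or as the first pipeline stage, that rejects changes containing compiled output. The Python sketch below assumes the list of changed paths is supplied from outside (for instance from `git diff --name-only`); the set of suffixes treated as compiled artifacts is a hypothetical starting point.

```python
"""Sketch of a source-only check for changed files.

The suffix list is a hypothetical starting point; adjust it per technology stack.
"""
from pathlib import PurePosixPath
from typing import Iterable, List

# File suffixes treated as compiled or packaged output (hypothetical selection).
COMPILED_SUFFIXES = {
    ".class", ".jar", ".war", ".ear",   # Java
    ".o", ".a", ".so", ".dll", ".exe",  # native code
    ".pyc", ".whl",                     # Python
}


def compiled_artifacts(paths: Iterable[str]) -> List[str]:
    """Return the paths whose suffix marks them as compiled output, not source."""
    return [p for p in paths if PurePosixPath(p).suffix.lower() in COMPILED_SUFFIXES]


if __name__ == "__main__":
    changed = ["src/main/java/App.java", "build/libs/app.jar", "README.md"]
    print(compiled_artifacts(changed))  # prints ['build/libs/app.jar']
```

A non-empty result would abort the push or fail the pipeline stage. Suffix matching is only a coarse heuristic; it will not catch binaries stored under unusual names.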

The pipeline builds the code only once and stores the generated artifacts and container images in their respective repositories. The same artifacts are then deployed to every environment; configuration differences are applied through external mechanisms such as environment variables (fig. 5).
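To illustrate how a single artifact can serve every environment, here is a minimal Python sketch in which all environment-specific settings are read from environment variables at startup. The variable names `APP_DATABASE_URL`, `APP_LOG_LEVEL` and `APP_FEATURES` are hypothetical; the point is that nothing environment-specific is baked into the built artifact.

```python
"""Sketch: one build artifact, configured per environment through variables.

The variable names APP_DATABASE_URL, APP_LOG_LEVEL and APP_FEATURES are hypothetical.
"""
import os
from dataclasses import dataclass
from typing import FrozenSet, Mapping


@dataclass(frozen=True)
class AppConfig:
    database_url: str
    log_level: str
    features: FrozenSet[str]


def load_config(environ: Mapping[str, str] = os.environ) -> AppConfig:
    """Read all environment-specific settings at startup."""
    database_url = environ.get("APP_DATABASE_URL")
    if not database_url:
        # Fail fast instead of silently falling back to a built-in default.
        raise RuntimeError("APP_DATABASE_URL must be provided by the target environment")
    log_level = environ.get("APP_LOG_LEVEL", "INFO").upper()
    raw_features = environ.get("APP_FEATURES", "")
    features = frozenset(f.strip() for f in raw_features.split(",") if f.strip())
    return AppConfig(database_url, log_level, features)


if __name__ == "__main__":
    # The same code runs in both environments; only the variables differ.
    staging = {"APP_DATABASE_URL": "postgresql://db.staging.example/app", "APP_LOG_LEVEL": "debug"}
    production = {"APP_DATABASE_URL": "postgresql://db.prod.example/app", "APP_FEATURES": "audit"}
    print(load_config(staging))
    print(load_config(production))
```

Failing fast on a missing required variable is a deliberate choice in this sketch: a misconfigured deployment stops immediately instead of running with a default that might point at the wrong environment.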

Fig. 5: A CI/CD-pipeline

Permissions for developers and operators are another aspect of a trustworthy environment. Many developers (and operators) claim god-like permissions for themselves, since they feel they understand the environments and need to be in charge. This is exactly the wrong direction: developers need only user privileges, and their software and services should not have more permissions than required to get the job done; even those need to be reviewed and checked. This holds true for software running in traditional environments as well as for software running inside containers. As a rule, requests for root access or elevated privileges should be investigated upfront and viewed critically; by default, they should simply be denied!
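For containers, one concrete place to deny root by default is the image definition itself: unless a `USER` instruction is set (or a user is overridden at runtime), Docker runs the container process as root. The Python sketch below flags Dockerfiles whose final stage does not switch to a non-root user. It assumes the image is built from the last stage (no `--target`), and it is conservative: a missing `USER` counts as root even though a base image may already set a non-root user.

```python
"""Sketch of a pre-build check that flags images running as root.

Assumes the image is built from the last stage of the Dockerfile (no --target).
"""
from typing import Optional


def final_stage_user(dockerfile_text: str) -> Optional[str]:
    """Return the user set by the last USER instruction of the final stage, if any."""
    user: Optional[str] = None
    for raw_line in dockerfile_text.splitlines():
        parts = raw_line.strip().split()
        if not parts:
            continue
        instruction = parts[0].upper()
        if instruction == "FROM":
            user = None  # a new stage begins; earlier USER lines no longer apply
        elif instruction == "USER" and len(parts) > 1:
            user = parts[1].split(":", 1)[0]  # drop an optional ":group" suffix
    return user


def runs_as_root(dockerfile_text: str) -> bool:
    """Conservatively treat a missing USER instruction as root."""
    user = final_stage_user(dockerfile_text)
    return user is None or user in {"root", "0"}


if __name__ == "__main__":
    dockerfile = (
        "FROM registry.internal.example/base/python:3.12-slim\n"
        "COPY app/ /app/\n"
        "USER 10001:10001\n"
    )
    print(runs_as_root(dockerfile))  # prints False
```

Any exception to this check would then go through the upfront investigation described above rather than being granted silently.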

As for operators, direct SSH access to environments and infrastructure needs to be denied as well. Configuration changes need to be scripted, versioned and rolled out automatically. Manual operations, adjustments and fixes need to be denied; every change and every addition to an environment is to be executed in a scripted, documented way. Permissions for the scripts being executed need to be as low as possible, and passwords need to be eliminated: only SSH keys (deployed automatically) are allowed for accessing infrastructure. Tools such as Ansible and Terraform are to be used extensively instead of relying on manual approaches. Centralized real-time logging needs to be set up, and operational actions should be triggered automatically from this real-time data instead of by manually watching dashboards and interpreting log file entries. Ultimately, this most often implies a completely new approach to handling and operating environments, marking a shift from manual operations towards automation. But it is worth the effort, since it eliminates many of the traditional security and trust issues within operational environments.
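The "no passwords, keys only" rule can itself be verified automatically, for example as a step that runs after the configuration tooling has rolled out a host. The Python sketch below checks OpenSSH `sshd_config` text for three real directives (`PasswordAuthentication`, `PermitRootLogin`, `PubkeyAuthentication`). Assumptions: for most keywords sshd uses the first value it reads, which the sketch mirrors; `Match` blocks and `Include` files are out of scope; and since OpenSSH defaults vary between versions, an unset directive counts as a violation.

```python
"""Sketch: verify that sshd_config enforces key-only, non-root SSH access."""
import re
from typing import Dict, List

# Required values (keywords lowercased, since sshd keywords are case-insensitive).
REQUIRED_SETTINGS = {
    "passwordauthentication": "no",
    "permitrootlogin": "no",
    "pubkeyauthentication": "yes",
}


def effective_settings(config_text: str) -> Dict[str, str]:
    """Collect global settings; for each keyword the first value read wins."""
    settings: Dict[str, str] = {}
    for raw_line in config_text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments
        if not line:
            continue
        # Accept both "Keyword value" and "Keyword=value".
        parts = re.split(r"[\s=]+", line, maxsplit=1)
        keyword = parts[0].lower()
        if keyword == "match":
            break  # conditional Match blocks are not evaluated in this sketch
        if len(parts) == 2:
            settings.setdefault(keyword, parts[1].strip().lower())
    return settings


def violations(config_text: str) -> List[str]:
    """Describe every required setting that is missing or has the wrong value."""
    settings = effective_settings(config_text)
    return [
        f"{key} is {settings.get(key, '<unset>')!r}, expected {expected!r}"
        for key, expected in REQUIRED_SETTINGS.items()
        if settings.get(key) != expected
    ]


if __name__ == "__main__":
    sample = (
        "PermitRootLogin no\n"
        "PasswordAuthentication yes  # left over from a manual change\n"
        "PubkeyAuthentication yes\n"
    )
    for problem in violations(sample):
        print(problem)
```

Treating unset directives as violations keeps the check independent of the OpenSSH version installed on a given host.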

DevSecOps and trustworthiness – a good start!

The measures described allow for greater trustworthiness and higher security. Applied in conjunction with a DevSecOps process, they make for a lean and secure solution.

Obviously, they can only be a starting point, since each organization has its own needs and requirements, which need to be brought into the overall picture as well.

A complete approach to a secure environment would address even more aspects, such as data management, data security, encryption and SSL termination, update cycles for the base container images and components in use, a proactive approach to security issues including penetration testing and monitoring of security bulletins, intrusion detection, and very strict rights and permission management.

The main takeaway is this: security starts with processes and with the trustworthiness of the code running in an environment, but it does not end there at all. It is an ongoing process involving many stakeholders and many aspects, and it needs to be practiced from the very start of a software and infrastructure development project. If applied later on, it becomes expensive and unmanageable, creating far more challenges and complexity than if it had been set up from the beginning.

And ultimately, it is a matter of mindset and approach. It needs to be understood as a strategic aspect of every project, one that cannot be ignored or treated as an add-on.

Author:

Karsten Samaschke

Co-Founder and CEO of Cloudical

karsten.samaschke@cloudical.io

cloudical.io / cloudexcellence.io